Expression Cloning: Technique and Implementation

by hmaya 2007.5.22 {hmaya@163.com}

Keywords: Facial Expression, Maya® Plug-in, RBF NN, Morphing, Cloning

There are many ways to create facial expressions, such as extracting expression data from video or images, creating and adjusting expressions by hand, or recording them with expression-capture equipment. The main problem with these methods is that an expression library usually has to be built for each character, and the animation data cannot be shared with or reused by other characters.

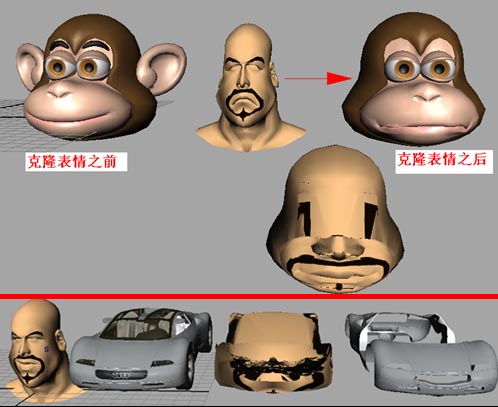

Expression cloning is a technique that reuses existing data by transferring expression data between different models. It can greatly improve the efficiency of producing facial expressions.

After reviewing many papers and books on morphing and facial expression, I found that the expression cloning method proposed by Jun-yong Noh and Ulrich Neumann reuses animation data well and can be applied to PNAI. Because the PNAI test platform is built on Maya®, and Maya® is widely used in 3D animation, this thesis aims to improve and extend the existing expression cloning algorithm and to develop a plug-in with the Maya® C++ API.

Drawing on artificial intelligence, computer graphics, the Maya® software development kit, and RBF networks, I implemented an expression cloning algorithm as a Maya® plug-in. By combining it with Maya®'s Blend Shape technology, I extended the application of the algorithm: the plug-in can not only clone expressions but also rebuild and combine them. After rebuilding and combining, we can generate expressions on the target model in an order different from that of the source model's original expressions. Moreover, Blend Shape lets us adjust the intensity of an expression. These features give users more freedom and more choices, so the plug-in can serve more artists and be more widely adopted in the field.

Implementing the expression cloning algorithm as a Maya® plug-in posed two main challenges.

■ 1. How to transfer the expression data of the source model to the target model? The two models differ in many ways: vertex count, size, topology and structure, the direction and range of muscle movement, and so on.

One method is to compute the motion vector of every source vertex, adjust it, and apply it to the target model. From the adjusted motion vectors we compute the new position of every target vertex; the target vertices then move like the source vertices, so the target model shows an expression similar to the source model's.
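A minimal Python sketch of the basic idea: a motion vector is the displacement of a source vertex between the neutral face and one posed frame, and a target vertex moves by the motion vector of its source counterpart. This version applies the vectors unadjusted and assumes a precomputed vertex-index correspondence (the `correspondence` list and all function names are hypothetical); the per-vertex transform matrices and barycentric blending described in the following paragraphs replace both simplifications.

```python
# Sketch: transfer per-vertex motion vectors from a source face to a target face.
# Assumptions (not from the thesis): vertices are (x, y, z) tuples, and
# correspondence[i] is the source vertex that drives target vertex i.

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def motion_vectors(neutral: list[Vec3], posed: list[Vec3]) -> list[Vec3]:
    """Displacement of every source vertex from the neutral pose to one frame."""
    return [sub(p, n) for n, p in zip(neutral, posed)]


def apply_motion(target_neutral: list[Vec3], vectors: list[Vec3],
                 correspondence: list[int]) -> list[Vec3]:
    """New target positions: rest position plus the (unadjusted) source motion."""
    return [add(t, vectors[correspondence[i]]) for i, t in enumerate(target_neutral)]


src_neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
src_smile = [(0.0, 0.5, 0.0), (1.0, 0.25, 0.0)]
vectors = motion_vectors(src_neutral, src_smile)
target = apply_motion([(10.0, 0.0, 0.0), (12.0, 0.0, 0.0), (11.0, 1.0, 0.0)],
                      vectors, [0, 1, 1])
print(target)  # [(10.0, 0.5, 0.0), (12.0, 0.25, 0.0), (11.0, 1.25, 0.0)]
```

Copying vectors verbatim ignores differences in size and orientation between the faces, which is exactly why the method adjusts each vector before applying it.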

To adjust the motion vectors, we first morph the source model onto the target model and then build a transform matrix for every source vertex; these matrices adjust the motion vectors before they are applied to the target model. The details are as follows:

First, I morph the source model onto the target model using the powerful interpolation and classification abilities of a Radial Basis Function Neural Network (RBF NN). To obtain the training samples and target data for the RBF NN, we mark corresponding vertices on the source and target models. For example, if vertex P is at the tip of the nose on the source model and vertex P’ is at the tip of the nose on the target model, then P and P’ form a corresponding pair: the coordinates of P are fed to the RBF NN as a sample, and the coordinates of P’ as the target data. After training on the samples and target data, the RBF NN converges to a stable network with a set of weights. With these weights we compute the new position of every source vertex, which morphs the source model onto the target model.
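The RBF morphing step can be sketched as scattered-data interpolation. Rather than iterative training, this sketch solves the interpolation system directly, a common way to fit an RBF interpolant. It fits the displacement P’ - P at each marked pair (equivalent to mapping P to P’ at the landmarks) and then applies the interpolated displacement to every source vertex. The multiquadric basis, the shape parameter `C = 1.0`, the absence of a polynomial term, and all function names are assumptions for illustration, not the thesis's exact network.

```python
import math

# Sketch: RBF morphing of source landmarks P onto target landmarks P'.
# Assumptions (not from the thesis): multiquadric basis, shape parameter C,
# no polynomial term, weights found by a direct linear solve.

Vec3 = tuple[float, float, float]
C = 1.0


def phi(r: float) -> float:
    """Multiquadric radial basis function."""
    return math.sqrt(r * r + C * C)


def solve(A: list[list[float]], B: list[list[float]]) -> list[list[float]]:
    """Solve A X = B by Gaussian elimination with partial pivoting."""
    n, m = len(A), len(B[0])
    M = [list(a) + list(b) for a, b in zip(A, B)]  # augmented matrix [A | B]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + m):
                M[r][k] -= f * M[col][k]
    X = [[0.0] * m for _ in range(n)]
    for r in range(n - 1, -1, -1):  # back substitution
        for j in range(m):
            s = M[r][n + j] - sum(M[r][k] * X[k][j] for k in range(r + 1, n))
            X[r][j] = s / M[r][r]
    return X


def fit(src_marks: list[Vec3], dst_marks: list[Vec3]) -> list[list[float]]:
    """Weights w_j with sum_j w_j * phi(|P_i - P_j|) = P'_i - P_i for every pair."""
    A = [[phi(math.dist(p, q)) for q in src_marks] for p in src_marks]
    B = [[d - s for s, d in zip(p, q)] for p, q in zip(src_marks, dst_marks)]
    return solve(A, B)


def morph(v: Vec3, src_marks: list[Vec3], w: list[list[float]]) -> Vec3:
    """Move any source vertex by the interpolated landmark displacement."""
    h = [phi(math.dist(v, p)) for p in src_marks]
    return tuple(v[k] + sum(hj * wj[k] for hj, wj in zip(h, w)) for k in range(3))


src = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
dst = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 2.0)]
weights = fit(src, dst)
print(morph((1.0, 0.0, 0.0), src, weights))  # reproduces P' up to rounding
```

Each marked P lands on its P’; unmarked vertices between landmarks are carried along smoothly, which is what lets the whole source mesh take on the target's shape.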

Second, after morphing the source model onto the target model, we build the transform matrices. Each transform matrix is built from local space and world space, and multiplying an original motion vector by its transform matrix yields a new motion vector. Another problem is that the source and target models have different vertex counts, so the new motion vectors cannot be applied to the target vertices directly. Our solution is to blend motion vectors with barycentric coordinates. Barycentric coordinates require every face of the source polygon model to be a triangle; if the source model contains quadrilaterals or other polygons, a step called “Triangulate” converts every face into triangles.
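The two operations in this step, transforming motion vectors and blending them with barycentric coordinates, can be sketched as follows. The sketch assumes the 3x3 matrices are already given (how they are derived from local and world space is not shown) and that the target vertex `p` has already been located on a triangle of the morphed, triangulated source mesh; the function names are hypothetical.

```python
# Sketch: adjust motion vectors with per-vertex transform matrices, then
# blend them at a target vertex with barycentric coordinates.
# Assumptions (not from the thesis): matrices are given, and p lies on
# triangle (a, b, c) of the morphed source mesh.

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]  # row-major


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    return (dot(m[0], v), dot(m[1], v), dot(m[2], v))


def barycentric(p: Vec3, a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    """Weights (u, v, w) with p = u*a + v*b + w*c for p in the plane of abc."""
    e0, e1, e2 = sub(b, a), sub(c, a), sub(p, a)
    d00, d01, d11 = dot(e0, e0), dot(e0, e1), dot(e1, e1)
    d20, d21 = dot(e2, e0), dot(e2, e1)
    denom = d00 * d11 - d01 * d01
    v = (d11 * d20 - d01 * d21) / denom
    w = (d00 * d21 - d01 * d20) / denom
    return (1.0 - v - w, v, w)


def blended_motion(p: Vec3, corners: list[Vec3], vectors: list[Vec3],
                   matrices: list[Mat3]) -> Vec3:
    """Transform each corner's motion vector, then mix by barycentric weight."""
    weights = barycentric(p, *corners)
    adjusted = [mat_vec(m, mv) for m, mv in zip(matrices, vectors)]
    return tuple(sum(wt * a[k] for wt, a in zip(weights, adjusted)) for k in range(3))


I3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
S2 = ((2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 2.0))
tri = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
moves = [(0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
print(barycentric((0.25, 0.25, 0.0), *tri))  # (0.5, 0.25, 0.25)
print(blended_motion((0.25, 0.25, 0.0), tri, moves, [S2, I3, I3]))  # (0.0, 1.0, 0.0)
```

Because the three weights sum to 1, a target vertex sitting exactly on a source vertex receives that vertex's adjusted motion unchanged, and vertices in between get a smooth mix.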

Finally, we record the positions of the target vertices at different times as keyframes, so the target model moves like the source model.

As mentioned above, we have to mark corresponding vertices on the source and target models. How to mark them was my second main challenge.

■ 2. How to mark corresponding vertices? Every vertex in Maya® has an index ID, such as “pCylinder1.vtx[34]”. Vertices can be distinguished by index ID, but this becomes hard to manage when many vertices must be marked.

My method is to use blind data. Blind data is extra information that can be attached to a vertex or face, and it can also be read by other software, such as a game engine.

Blind data in Maya® can be both set and queried. I wrote a MEL script that displays a window in Maya®, so anyone can easily set blind data on the selected vertices. In addition, the Maya® C++ API provides “getDoubleBlindData()” in the MFnMesh class, through which we can query the blind data of any vertex.

If we assign different blind data values to different vertices, we can easily identify the vertices by querying their blind data. With my MEL script, marking corresponding vertices becomes easy.
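One way the queried blind data could be turned into corresponding pairs, sketched in Python under an assumed tagging scheme: a source vertex and its target partner carry the same integer-valued tag, and each tag appears once per model. In Maya® the two dictionaries would be filled per vertex through MFnMesh::getDoubleBlindData(); here they are plain stand-ins, and `invert` and `pair_by_tag` are hypothetical names.

```python
# Sketch: build corresponding vertex pairs from blind-data tags.
# Assumption (not from the thesis): a source vertex and its target partner
# carry the same tag value, and each tag is used once per model.


def invert(tags: dict[int, float], side: str) -> dict[float, int]:
    """Map tag value -> vertex index, rejecting tags used twice."""
    by_tag: dict[float, int] = {}
    for vid, tag in tags.items():
        if tag in by_tag:
            raise ValueError(f"{side}: tag {tag} is on vertices {by_tag[tag]} and {vid}")
        by_tag[tag] = vid
    return by_tag


def pair_by_tag(src_tags: dict[int, float],
                dst_tags: dict[int, float]) -> list[tuple[int, int]]:
    """Return (source vertex, target vertex) pairs ordered by tag value."""
    src, dst = invert(src_tags, "source"), invert(dst_tags, "target")
    unmatched = set(src) ^ set(dst)
    if unmatched:
        raise ValueError(f"tags without a partner: {sorted(unmatched)}")
    return [(src[t], dst[t]) for t in sorted(src)]


# e.g. nose tip tagged 1.0 and chin tagged 2.0 on both meshes
print(pair_by_tag({34: 1.0, 12: 2.0}, {5: 2.0, 101: 1.0}))  # [(34, 101), (12, 5)]
```

Checking for duplicated or unpartnered tags up front catches marking mistakes before they silently distort the RBF fit.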

After solving these two main problems, I developed the plug-in with the Maya® C++ API.

Maya® has a technology called “Blend Shape”, which works well together with my plug-in.

With my expression cloning plug-in, expression data can be transferred from the source model to the target model frame by frame. Cloning a frame sequence produces an expression animation; cloning a single frame produces an expression pose. Suppose a source model carries facial animation. We can of course transfer its whole facial animation to the target model by cloning the frame sequence. More importantly, we can pick out several useful expressions and transfer them to the target model by specifying their frames, which gives us several useful expression poses.

Once we have several useful expression poses, creating new facial animation with Blend Shape is easy. This means we can not only clone expressions but also rebuild them: combined with Maya®'s Blend Shape technology, we can obtain new facial sequences. For example, if the facial sequence of the source model is “standard -> amazed -> envious -> sad”, the plug-in and Blend Shape let us build a new facial animation on the target model such as “envious -> standard -> amazed -> sad”. This extends the application of expression cloning and offers users more freedom and more choices.
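The rebuilding step rests on the standard relative blend shape formula, result = base + Σ wᵢ (targetᵢ - base). The sketch below evaluates it and keys the cloned poses in a new order. It assumes each cloned pose is stored as a full vertex list, that “standard” is the base mesh, and that each key sets exactly one pose to weight 1. The one-vertex meshes and the names `blend` and `key_weights` are illustrative.

```python
# Sketch: evaluate a relative blend shape and rebuild a pose sequence.
# Assumptions (not from the thesis): cloned poses are full vertex lists,
# "standard" is the base mesh, and each key sets one pose to weight 1.

Vec3 = tuple[float, float, float]


def blend(base: list[Vec3], targets: list[list[Vec3]],
          weights: list[float]) -> list[Vec3]:
    """Relative blend shape: base + sum_i w_i * (target_i - base), per vertex."""
    out = []
    for i, b in enumerate(base):
        out.append(tuple(
            b[k] + sum(w * (t[i][k] - b[k]) for t, w in zip(targets, weights))
            for k in range(3)))
    return out


def key_weights(order: list[str], names: list[str]) -> list[list[float]]:
    """One weight vector per key; 'standard' (the base) gives all zeros."""
    return [[1.0 if n == pose else 0.0 for n in names] for pose in order]


names = ["amazed", "envious", "sad"]
base = [(0.0, 0.0, 0.0)]  # one-vertex stand-in for the neutral target face
poses = [[(1.0, 0.0, 0.0)], [(0.0, 1.0, 0.0)], [(0.0, 0.0, 1.0)]]
new_order = ["envious", "standard", "amazed", "sad"]
frames = [blend(base, poses, w) for w in key_weights(new_order, names)]
print(frames)  # [[(0.0, 1.0, 0.0)], [(0.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)], [(0.0, 0.0, 1.0)]]
```

In Maya® the blend shape deformer performs this sum itself, and keying its weights over time produces the in-between frames; the sketch only evaluates the key poses.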

Moreover, Blend Shape also lets us adjust the intensity of a facial expression. For example, if the source model shows an obvious smile, we can transfer the smile to the target model and then reduce its intensity to get a light smile.
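In blend shape terms, adjusting the intensity of an expression amounts to scaling one weight. With a base-mesh vertex $\mathbf{b}$ and the corresponding vertex $\mathbf{s}$ of the cloned smile pose, a weight $w$ below 1 keeps only part of each displacement. The weight 0.3 and the 2-unit displacement below are illustrative numbers, not values from the thesis.

$$
\mathbf{v}(w) = \mathbf{b} + w\,(\mathbf{s} - \mathbf{b}), \qquad 0 \le w \le 1
$$

For example, if a mouth-corner vertex moves 2 units in the full smile ($w = 1$), it moves $0.3 \times 2 = 0.6$ units at $w = 0.3$. Every vertex is scaled the same way, so the shape of the smile is kept while its strength drops.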

This plug-in can greatly improve the efficiency of producing facial expressions, especially when a film has many characters that all need facial animation. We only need to create facial animation for one character and clone it to the others.

Because of these features, the plug-in extends the application of the existing algorithm and is convenient and easy to use, so it can serve more artists and become more popular in the animation field.

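Expression Cloning: Technique and Implementation

Keywords: facial expression animation, Maya® plug-in, RBF neural network, morphing, cloning

There are many existing methods for producing facial expression animation, but most of them build a separate expression library for each character: the animation data belongs to a single character and cannot be reused across characters. If existing animation data could be reused, transferring one character's expression animation to a new character (that is, expression cloning), animators' workload would drop substantially, the production pipeline for expression animation would be simplified, and productivity would rise.

After reviewing and comparing much of the existing literature on morphing and expression animation, I found that the expression cloning algorithm proposed by Jun-yong Noh and Ulrich Neumann achieves good reuse of animation data and can be applied well to PNAI. Since this test platform is built on Maya®, and Maya® has a large user base in the animation industry, this thesis aims to improve and extend the application of that algorithm and to develop an expression cloning plug-in based on the Maya® C++ API.

Drawing on three fields (artificial intelligence, computer graphics, and the Maya® software package), and after working out a series of core techniques including marking corresponding points, RBF morphing, constructing transform matrices, and transferring motion vectors, this thesis implements the algorithm of Jun-yong Noh and Ulrich Neumann as a Maya® plug-in and, combined with Maya®'s Blend Shape technology, substantially extends its application. The plug-in can currently not only clone expression animation, reusing existing animation data to generate target expression animation in the same order as the source animation, but also extract useful parts of the source animation data, rebuild and combine them, and generate target expression animation in any order. In addition, combined with Blend Shape, it can adjust the intensity of an expression, for example turning a wild laugh into a smile. This pioneering work gives users more choice and freedom, extends the application of expression cloning, and helps the technique be better accepted and used by the industry, serving more artists.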

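My contact: hmaya@163.com

Since many sites charge a fee to download my thesis, I have decided to publish it here for everyone to download free of charge. A thesis is meant to be shared, for later readers to consult and use in their research. (Commercial use is excluded; for any special use, please contact me at hmaya@163.com.)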
